Beyond Chatbots: How Model Context Protocol Accelerates AI Agent Capabilities

By Urmila Raju

Welcome to AWSCQ.

For this edition we’re delighted to welcome the brilliant Urmila Raju as our Guest Editor.

Urmila is a Senior Solutions Architect at AWS, specializing in the Capital Markets industry and helping customers modernize and innovate on AWS.

Based in London, she brings deep expertise in cloud architecture, financial services, and emerging technologies including AI and serverless computing.

Urmila is an active member of the AWS community. Many of you may have seen her speaking at Manchester Comsum in September, delivering her talk Next-Generation AWS Step Functions: AI Integration and Advanced Orchestration.

So, without further ado, let’s hand over to Urmila!

I have a confession: I’ve spent more time this year talking to my AI chat assistant (Amazon Q, in my case) than talking with my team.

Amazon Q, GitHub Copilot, Claude: they’re brilliant at answering questions and generating code. But the moment you need them to take action (check a log, update a Lambda function, verify an alarm in your AWS account), you’re back in the AWS console.

Here’s the thing: that friction is disappearing. Bringing agentic AI capabilities to your chatbots, and augmenting them with open protocols like Model Context Protocol (MCP) and Agent-to-Agent (A2A), is quietly revolutionizing how AI agents interact with AWS services and external tools. We’re moving from “AI that suggests” to “AI that executes”. If you’ve been building serverless applications, deploying containers, or managing cloud operations, this matters. A lot.

In this post, I want to focus on how to use suitable AI agents based on your user personas and make your AI agents context-aware using MCP servers.

So, what is an MCP Server?

An MCP Server is a lightweight program that exposes specific capabilities through the standardized Model Context Protocol. Host applications (such as chatbots, IDEs, and other AI tools) have MCP clients that maintain 1:1 connections with MCP servers. Common MCP clients include agentic AI coding assistants like Q Developer, Cline, Cursor, and Windsurf, as well as chatbot applications like Claude Desktop. MCP servers access local data sources and remote services to provide additional context that improves model outputs.
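To make the idea concrete, here is a minimal sketch of the request/response shape an MCP server deals with. It is a toy, in-process handler rather than a real server built with an MCP SDK: the `get_alarm_state` tool and its stubbed reply are hypothetical, and only the JSON-RPC 2.0 envelope and the `tools/list` / `tools/call` method names follow the protocol.

```python
import json

# Hypothetical tool: a real server would query a data source or remote service here.
def get_alarm_state(alarm_name: str) -> str:
    return f"Alarm '{alarm_name}' is OK"

# Registry of the capabilities this toy server exposes.
TOOLS = {
    "get_alarm_state": {
        "description": "Return the state of a named alarm (stubbed).",
        "handler": get_alarm_state,
    }
}

def handle(message: str) -> str:
    """Handle one JSON-RPC 2.0 request string and return the response string."""
    request = json.loads(message)
    method = request["method"]
    if method == "tools/list":
        # Advertise the available tools so the client can offer them to the model.
        result = {
            "tools": [
                {"name": name, "description": tool["description"]}
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        # Run the requested tool with the arguments the model supplied.
        params = request["params"]
        text = TOOLS[params["name"]]["handler"](**params["arguments"])
        result = {"content": [{"type": "text", "text": text}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})

if __name__ == "__main__":
    print(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
```

The MCP client inside the host application sends these messages on the model's behalf; the model itself only sees the tool names, descriptions, and results.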

For a deeper dive into MCP fundamentals, check out Unlocking the power of Model Context Protocol (MCP) on AWS.

Why AWS MCP Servers Matter

First, let’s see how software development and deployment in AWS can be accelerated by the use of AWS MCP servers.

AWS MCP servers bridge the gap between what foundation models know and what AWS practitioners need, delivering accurate technical details, current documentation, and workflow automation that turns AI assistants into reliable cloud development partners.

A Real-World Example

Your finance team notices Lambda costs have spiked this month and asks the platform team to rationalize it. Traditionally, you’d dive into Cost Explorer and Cost and Usage Reports, drilling down manually. With AWS MCP servers, you can ask your AI assistant directly instead. Below is an example using the AWS Cost Explorer MCP server with Amazon Q CLI.

Prompt1:

Response1:

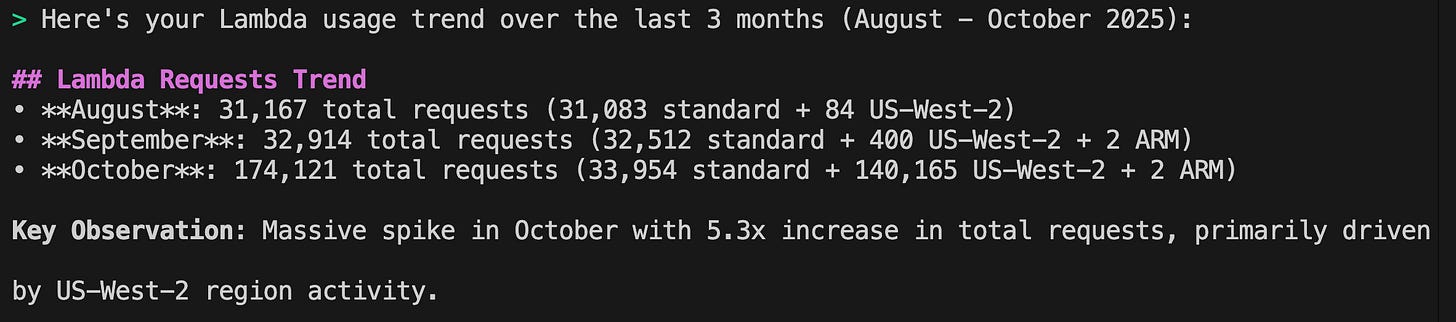

Prompt2:

Response2:

With just two questions, you can narrow down to the issue, dramatically reducing troubleshooting time.

User personas like Developers, Infrastructure and Platform Engineers, Data Analysts, Architects, DevOps, and CloudOps can all benefit from the wide range of AWS MCP servers.

Choosing the Right Tools: Avoiding the “Too Many Cooks” Problem

When using multiple MCP servers, Large Language Models (LLMs) may choose tools from any available server without consideration for which is most appropriate. MCP has no built-in orchestration or enforcement mechanisms at this time—LLMs can use any tool from any server at will.

Common Tool Selection Conflicts

Multiple Infrastructure MCP Servers: For example, using the Cloud Control API MCP server alongside other MCP servers that perform similar functions (such as Terraform MCP, CDK MCP, CFN MCP) may cause LLMs to randomly choose between them.

Built-in Tools: LLMs may choose built-in tools instead of MCP server tools. For example, Amazon Q Developer CLI has use_aws, execute_bash, fs_read, and fs_write built in.

The Solution: Use custom agents augmented with only the necessary tools. This blog post explains, with an example, how “front-end” and “back-end” agents, each with specific MCP servers, can be customized for two different developer personas. Think of it as giving each agent a focused toolbox rather than access to the entire workshop.
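The "focused toolbox" idea can be sketched as a simple allowlist per agent. This is only an illustration of the principle, not the custom-agent configuration format of any particular assistant: the server names and most tool names below are hypothetical, and only the built-in tool names (`use_aws`, `execute_bash`, `fs_read`, `fs_write`) come from the text above.

```python
# Hypothetical catalogue: server name -> tools it exposes.
AVAILABLE_TOOLS = {
    "cdk-mcp": ["synthesize_stack", "deploy_stack"],
    "terraform-mcp": ["plan", "apply"],
    "frontend-docs-mcp": ["search_components"],
    "builtin": ["use_aws", "execute_bash", "fs_read", "fs_write"],
}

# Each persona's agent is allowed only the servers and tools it needs.
AGENT_ALLOWLISTS = {
    "front-end": {
        "frontend-docs-mcp": ["search_components"],
        "builtin": ["fs_read", "fs_write"],
    },
    "back-end": {
        "cdk-mcp": ["synthesize_stack", "deploy_stack"],
        "builtin": ["fs_read"],
    },
}

def toolbox_for(agent: str) -> list[str]:
    """Return the 'server/tool' names the given agent may see, sorted."""
    allow = AGENT_ALLOWLISTS[agent]
    return sorted(
        f"{server}/{tool}"
        for server, tools in AVAILABLE_TOOLS.items()
        for tool in tools
        if tool in allow.get(server, [])
    )

if __name__ == "__main__":
    for name in AGENT_ALLOWLISTS:
        print(name, "->", toolbox_for(name))
```

Because the back-end agent never sees the Terraform tools, the model cannot pick them at random, which is the conflict described above.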

Security First: Defining Permissions for Your Agents

The MCP client in your AI assistant connects to your AWS MCP servers using the AWS_PROFILE of the user. So, the IAM permissions of the user define the type of operations that the MCP server and its tools can perform on your AWS environment.

Example Scenario

If you’re a developer with an AWS profile configured for your DEV account and have read-only access to resources, then a user query like “create S3 bucket named xyz” will fail, even though you have the AWS Cloud Control API MCP server configured (which would create the resource if you had the right IAM permissions).

The takeaway? It’s critical to define the security boundary of your agents and their tools with fine-grained IAM permissions and resource-level policies where required.
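As a rough illustration of such a boundary, the sketch below defines a read-only IAM policy document and a deliberately simplified check against it. The chosen actions are only an example set, and `is_allowed` is a toy: real IAM evaluation also considers explicit denies, resources, conditions, and other policy types.

```python
import json
from fnmatch import fnmatchcase

# Example read-only policy for a developer profile (illustrative action set).
READ_ONLY_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "s3:Get*",
                "s3:List*",
                "lambda:Get*",
                "lambda:List*",
                "cloudwatch:DescribeAlarms",
                "ce:GetCostAndUsage",
            ],
            "Resource": "*",
        }
    ],
}

def is_allowed(policy: dict, action: str) -> bool:
    """Toy check: does any Allow statement's action pattern match?

    This is NOT how IAM evaluates requests; it ignores Deny statements,
    resources, and conditions, and exists only to illustrate the boundary.
    """
    for statement in policy["Statement"]:
        if statement["Effect"] != "Allow":
            continue
        if any(fnmatchcase(action, pattern) for pattern in statement["Action"]):
            return True
    return False

if __name__ == "__main__":
    print(json.dumps(READ_ONLY_POLICY, indent=2))
    print("s3:ListBucket allowed:", is_allowed(READ_ONLY_POLICY, "s3:ListBucket"))
    print("s3:CreateBucket allowed:", is_allowed(READ_ONLY_POLICY, "s3:CreateBucket"))
```

With a profile like this, a tool call that tries to create a bucket is rejected by AWS regardless of which MCP server issued it.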

Example MCP Configuration

Here’s what goes into your ~/.aws/amazonq/mcp.json:
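As a hedged illustration of the general shape of such a file, the Python sketch below builds and prints a single-server configuration. The `mcpServers` / `command` / `args` / `env` layout is the common MCP client convention; the server package name, the `uvx` launcher, and the profile and region values are assumptions for illustration, so check the documentation of the server you actually install.

```python
import json

def build_mcp_config(profile: str, region: str) -> dict:
    """Build an mcp.json-style dictionary for one stdio MCP server."""
    return {
        "mcpServers": {
            # Assumed server name and package; replace with the server you use.
            "awslabs.cost-explorer-mcp-server": {
                "command": "uvx",  # assumed launcher for Python-packaged servers
                "args": ["awslabs.cost-explorer-mcp-server@latest"],
                "env": {
                    # The IAM permissions behind this profile bound what the tools can do.
                    "AWS_PROFILE": profile,
                    "AWS_REGION": region,
                },
            }
        }
    }

# Hypothetical profile and region names.
config = build_mcp_config("dev-readonly", "eu-west-2")

if __name__ == "__main__":
    # The printed JSON is what you would save as the configuration file.
    print(json.dumps(config, indent=2))
```

Note how `AWS_PROFILE` ties the configuration back to the security boundary discussed above.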

Transport Modes: stdio vs. Streamable HTTP – A key consideration for MCP client-server communication

An MCP client can connect to an MCP server over two transport modes: stdio and streamable HTTP.

Use stdio for local, single-user applications like command-line tools and desktop apps, where the server runs as a subprocess of the client. It offers minimal latency and simplicity.

Use Streamable HTTP for remote, networked, and multi-user applications. It allows clients to connect to a server over the web, supporting features like scalability, high availability, and standard web authentication.
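The stdio mode can be sketched in a few lines: the client launches the server as a child process and exchanges newline-delimited JSON-RPC messages over its stdin and stdout. The "server" below is a toy echo script, not a real MCP server, and the `echoedMethod` field is made up for the demonstration.

```python
import json
import subprocess
import sys

# Toy server: reads one JSON-RPC request per line on stdin, replies on stdout.
SERVER_SOURCE = r'''
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"],
             "result": {"echoedMethod": request["method"]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
'''

def call_over_stdio(method: str) -> dict:
    """Start the toy server as a child process and exchange one message."""
    server = subprocess.Popen(
        [sys.executable, "-c", SERVER_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": method}
        server.stdin.write(json.dumps(request) + "\n")  # one message per line
        server.stdin.flush()
        return json.loads(server.stdout.readline())
    finally:
        server.stdin.close()  # closing stdin ends the server's read loop
        server.wait(timeout=5)

response = call_over_stdio("tools/list")

if __name__ == "__main__":
    print(response)
```

Because the server's lifetime is tied to one client process, this mode is inherently one-to-one, whereas an HTTP-based server can serve many clients over the network.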

Current State of AWS MCP Servers

As of this writing, most AWS MCP servers support stdio mode only, making them suitable for AI assistants like Cursor, Q CLI, or Claude Desktop, which have MCP clients that can establish one-to-one connections through stdio.

But if you have a use case for a custom chat app with custom agents that needs multiple HTTP connections to MCP servers, you’ll need a wrapper (for example, a Lambda function) to connect to the stdio MCP server through streamable HTTP.

Reference: Run Model Context Protocol servers with AWS Lambda

When do you need Custom Agents and MCP Servers for your Business?

So far, we’ve discussed AI agents in chat assistants acting as “productivity tools” for internal users who build end-user products. But what about bringing AI agents to your end users—customers of a bank, shoppers of an online grocery store, visitors to e-commerce websites?

The real power of AI transformation for your end-user customer journeys lies in building bridges between existing services and workflows and your AI agents.

Example:

If a bank customer asks, “Did any of my transactions fail last month?” in a chat app, the AI agent should provide context from the customer’s transaction history to the LLM to get an accurate response. getTransactionHistory might be an existing service, so the bridge here is to build a custom MCP server to expose getTransactionHistory as a tool to the AI agent.
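A minimal sketch of that bridge might look like the following. The in-memory data stands in for the existing getTransactionHistory service, and the customer IDs, field names, and summary wording are all hypothetical; the tool definition follows the MCP convention of a name, a description, and a JSON Schema for the inputs.

```python
from datetime import date

# Hypothetical stand-in for the existing getTransactionHistory service.
_TRANSACTIONS = [
    {"customer_id": "c-1", "date": date(2025, 9, 3), "amount": 42.50, "status": "SUCCESS"},
    {"customer_id": "c-1", "date": date(2025, 9, 17), "amount": 120.00, "status": "FAILED"},
    {"customer_id": "c-2", "date": date(2025, 9, 20), "amount": 15.00, "status": "FAILED"},
]

def get_transaction_history(customer_id: str, year: int, month: int) -> list[dict]:
    """Return one customer's transactions for the given month."""
    return [
        t for t in _TRANSACTIONS
        if t["customer_id"] == customer_id
        and t["date"].year == year
        and t["date"].month == month
    ]

# What the MCP server would advertise to the agent for this tool.
TOOL_DEFINITION = {
    "name": "getTransactionHistory",
    "description": "List a customer's transactions for a given month.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "customer_id": {"type": "string"},
            "year": {"type": "integer"},
            "month": {"type": "integer"},
        },
        "required": ["customer_id", "year", "month"],
    },
}

def call_tool(arguments: dict) -> dict:
    """Run the tool and wrap a short summary as an MCP-style text result."""
    rows = get_transaction_history(**arguments)
    failed = [r for r in rows if r["status"] == "FAILED"]
    text = f"{len(failed)} failed transaction(s) out of {len(rows)}"
    return {"content": [{"type": "text", "text": text}]}

if __name__ == "__main__":
    print(call_tool({"customer_id": "c-1", "year": 2025, "month": 9}))
```

In a real deployment the customer ID would come from the authenticated session rather than from the model, so the agent cannot be prompted into reading another customer's history.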

Shameless plug here: I delivered a session at AWS Community Summit 2025 that puts AWS Step Functions at the forefront, but the bigger idea is to showcase building custom agents and MCP servers at scale and integrating them with existing workflows (like Step Functions) through MCP servers. It covers a banking chat app example powered by AI agents and custom MCP servers.

Watch it here: Building AI Agents and augment Step Functions as MCP tools

Getting Started with Strands

There are various frameworks to build AI agents and MCP servers, and Strands is the open-source framework from AWS that helps you get started easily.

A variety of use cases and code samples using the Strands framework can be found here: Strands Agent Samples

Also worth watching is a very interesting talk from Aaron Walker at AWS Community Summit 2025: Building Cloud-Native AI Agents with Strands

Are We Ready to Build Production-Grade AI Agents?

The latest McKinsey Global Survey on the state of AI reveals a landscape defined by both wider use—including growing proliferation of agentic AI—and stubborn growing pains, with the transition from pilots to scaled impact remaining a work in progress at most organizations.

Building an AI agent that can handle a real-life use case in production is a complex undertaking. Although creating a proof of concept demonstrates potential, moving to production requires addressing scalability, security, observability, and operational concerns that don’t surface in development environments.

The AWS Answer: Amazon Bedrock AgentCore

Amazon Bedrock AgentCore is a comprehensive suite of services designed to help you build, deploy, and scale agentic AI applications. It helps you transition your agentic applications from experimental proof of concept to production-ready systems.

The suite includes:

AgentCore Runtime for secure agent deployment and scaling

AgentCore Gateway for enterprise tool development

AgentCore Identity for securing agentic AI at scale

AgentCore Memory for building context-aware agents

AgentCore Code Interpreter for code execution

AgentCore Browser Tool for web interaction

AgentCore Observability for transparency on your agent behavior

Amazon Bedrock AgentCore is promising and worth a separate newsletter, but if you’re getting started, I’d suggest exploring AgentCore Runtime and AgentCore Gateway first.

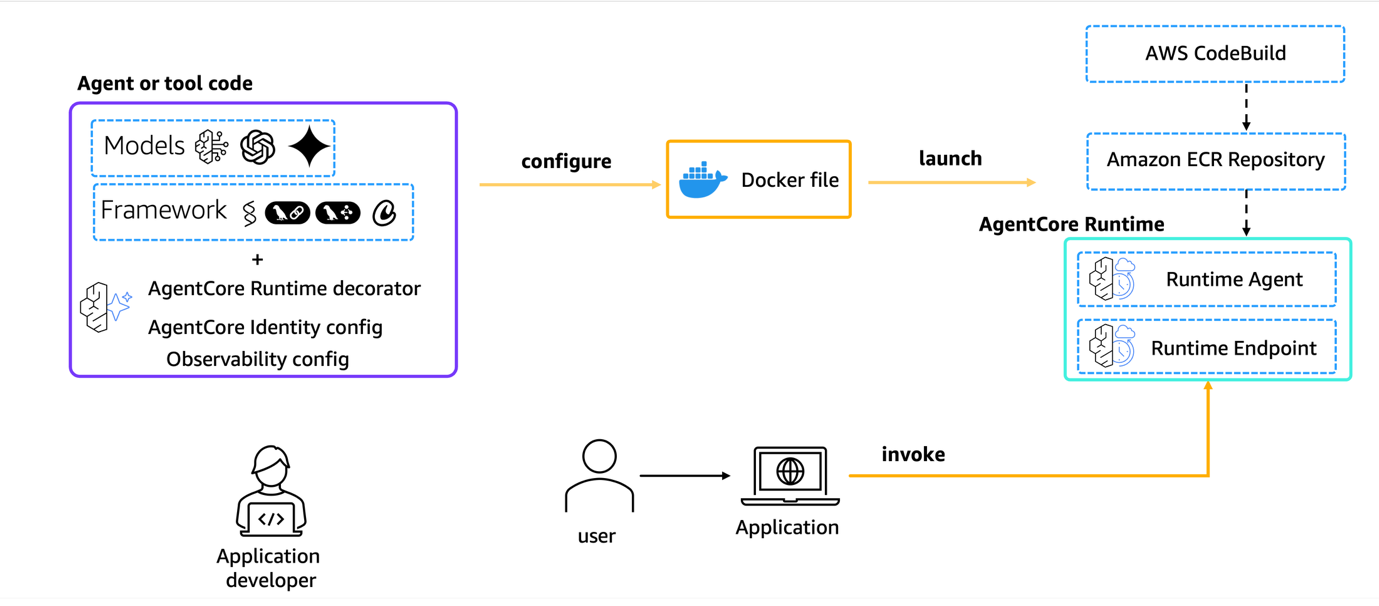

AgentCore Runtime: Purpose-Built for AI Agents

Amazon Bedrock AgentCore Runtime provides a secure, serverless hosting environment specifically designed for AI agents and tools. Traditional application hosting systems weren’t built for the unique characteristics of agent workloads—variable execution times, stateful interactions, and complex security requirements. AgentCore Runtime was purpose-built for these needs.

You can host your agents (built on frameworks like Strands, LangGraph, CrewAI, etc.) and your MCP servers on the Runtime.

Mental Model:

Amazon Bedrock AgentCore Python SDK provides a lightweight wrapper that helps you deploy your agent functions as HTTP services that are compatible with Amazon Bedrock. It handles all the HTTP server details so you can focus on your agent’s core functionality.
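To picture what "wrapping an agent function as an HTTP service" means, here is a standard-library sketch of the idea. It does not use the AgentCore SDK and is not its implementation: the `agent` function is a hypothetical echo, and the `/invocations` path and payload shape are assumptions chosen for the illustration.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

def agent(payload: dict) -> dict:
    """Hypothetical agent function: a real one would call a model and tools."""
    return {"result": f"You asked: {payload.get('prompt', '')}"}

class AgentHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/invocations":  # assumed path, for illustration only
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(agent(payload)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet

def invoke_once(prompt: str) -> dict:
    """Start the wrapper on a free local port, send one request, shut down."""
    server = HTTPServer(("127.0.0.1", 0), AgentHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        request = urllib.request.Request(
            f"http://127.0.0.1:{server.server_port}/invocations",
            data=json.dumps({"prompt": prompt}).encode(),
            headers={"Content-Type": "application/json"},
        )
        # Bypass any configured proxy so the call stays on the loopback interface.
        opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
        with opener.open(request, timeout=5) as response:
            return json.loads(response.read())
    finally:
        server.shutdown()
        server.server_close()

if __name__ == "__main__":
    print(invoke_once("Why did Lambda costs spike?"))
```

The point of a wrapper like this is that the agent function stays free of HTTP concerns; the hosting layer owns the server, and you own `agent`.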

Once the agent is launched to AgentCore Runtime, your application can invoke it using the SDK or any of AWS’s developer tools such as boto3, AWS SDK for JavaScript, or AWS SDK for Java.

You can also host MCP servers on AgentCore Runtime, but only using the Streamable HTTP transport mode. Note: This ties back to my earlier point that stdio MCP servers cannot be hosted on AgentCore at this time.

Reference: Hosting MCP Server Tutorial

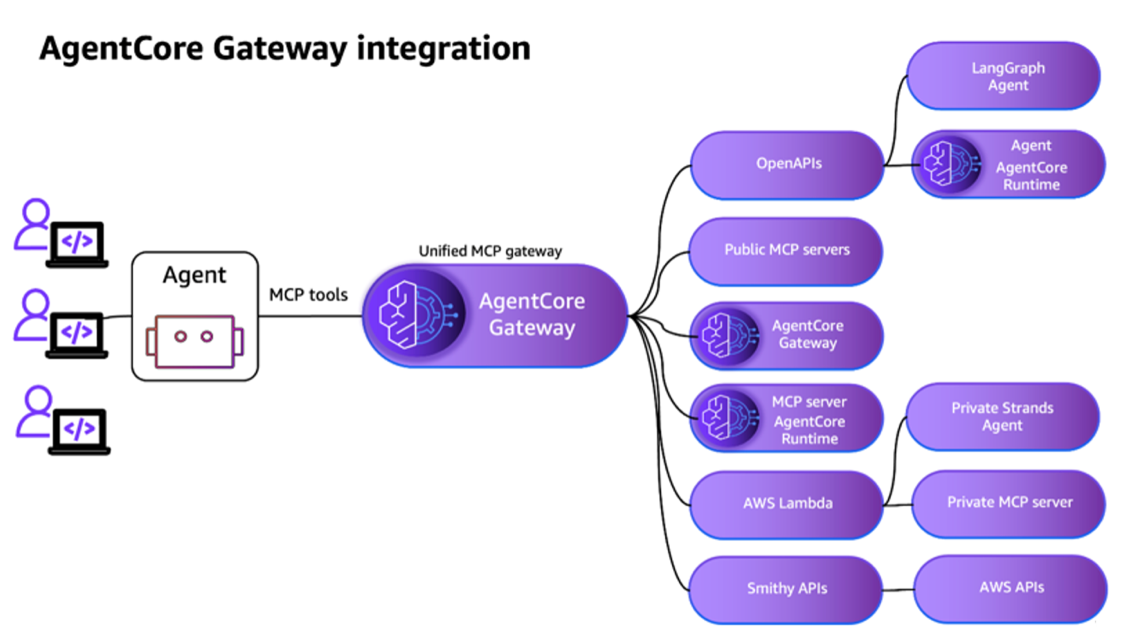

AgentCore Gateway: Your Central Tool Server

Amazon Bedrock AgentCore Gateway is a fully managed service that revolutionizes how enterprises connect AI agents with tools and services. As AI agents are adopted at scale, developer teams can create dozens to hundreds of specialized MCP servers, tailored for specific agent use cases, domains, organizational functions, or teams.

Organizations also need to integrate their own existing MCP servers or open-source MCP servers into their AI workflows. There’s a need for a way to efficiently combine these existing MCP servers—whether custom-built, publicly available, or open source—into a unified interface that AI agents can readily consume and teams can seamlessly share across the organization.

AgentCore Gateway serves as a centralized MCP tool server, providing a unified interface where agents can discover, access, and invoke tools.

Built with native support for MCP, Gateway enables seamless agent-to-tool communication while abstracting away security, infrastructure, and protocol-level complexities. This service provides:

Zero-code MCP tool creation from APIs and AWS Lambda functions

Intelligent tool discovery

Built-in inbound and outbound authorization

Serverless infrastructure for MCP servers

Your agents running in AgentCore Runtime communicate with AgentCore Gateway via MCP. The Gateway acts as a central hub connecting to various targets: MCP servers (hosted on AgentCore Runtime or public MCP servers), OpenAPI/REST APIs, AWS Lambda functions, and your custom business services.
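The hub idea can be illustrated with a toy in-process registry that gathers tools from several targets behind one interface. This is a conceptual sketch only, not how AgentCore Gateway is implemented or configured: the `ToolGateway` class, the `target.tool` naming, and the keyword-based discovery are all hypothetical.

```python
from typing import Callable

class ToolGateway:
    """Toy central registry: one place to discover and invoke tools."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[str, Callable[..., object]]] = {}

    def register_target(self, target: str, tools: dict[str, tuple[str, Callable[..., object]]]) -> None:
        """Add every tool of a target under a namespaced 'target.tool' name."""
        for name, (description, handler) in tools.items():
            self._tools[f"{target}.{name}"] = (description, handler)

    def discover(self, query: str) -> list[str]:
        """Naive discovery: substring match on tool name or description."""
        q = query.lower()
        return sorted(
            name
            for name, (description, _) in self._tools.items()
            if q in name.lower() or q in description.lower()
        )

    def invoke(self, name: str, **arguments: object) -> object:
        """Call a registered tool by its namespaced name."""
        return self._tools[name][1](**arguments)

gateway = ToolGateway()
# Hypothetical targets standing in for a Lambda function and a REST API.
gateway.register_target(
    "payments-lambda",
    {"refund": ("Refund a payment by ID.", lambda payment_id: f"refunded {payment_id}")},
)
gateway.register_target(
    "orders-api",
    {"get_order": ("Fetch one order by ID.", lambda order_id: {"id": order_id, "status": "SHIPPED"})},
)

if __name__ == "__main__":
    print(gateway.discover("order"))
    print(gateway.invoke("orders-api.get_order", order_id="o-42"))
```

Agents only need to know the gateway, so adding or replacing a target does not require touching every agent's configuration.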

References

Move your AI agents from proof of concept to production with Amazon Bedrock AgentCore - This blog mirrors the real-world path from PoC to production, demonstrating how AgentCore services work together.

Transform your MCP architecture: Unite MCP servers through AgentCore Gateway

Getting Started with AgentCore Today

As we have seen, AWS MCP servers are productivity tools that help internal personas build end-user products. If you’re building AI agents for your end users and want to get started with AgentCore, there’s an MCP server for AgentCore too!

Add the AgentCore MCP server to your mcp.json config in your AI chat assistants and get started today: Accelerate development with the Amazon Bedrock AgentCore MCP server

Final Thoughts

We’re at an inflection point in how we interact with cloud infrastructure and our enterprise services. The combination of agentic AI and the Model Context Protocol isn’t just making our existing workflows faster—it’s fundamentally changing what’s possible.

The shift from “AI that suggests” to “AI that executes” means we can spend less time on repetitive operations and more time on what matters: solving real business problems, innovating for our end-user customers, and building systems that create value.

Whether you’re just getting started with AWS MCP servers in your local development environment or architecting production-scale agentic systems with AgentCore, the path forward is clear: AI agents augmented with the right context and tools aren’t the future—they’re here now.

Start small. Pick one workflow that frustrates your team. Build a custom agent for it. See what happens. I think you’ll be surprised by how quickly “nice to have” becomes “how did we ever work without this?”

The tools are ready. The design patterns are established. The only question is: what will you build?

And that’s a wrap on another AWSCQ.

Urmila has been a great friend to Comsum, speaking at our last two Community Summits, so this really is a huge and heartfelt thank you from us for another fantastic contribution!

Thank you, Urmila!

Before you go, be sure to give our sponsors a click!

AWS Community Summit Events and AWSCQ are only possible with their generous support.

Fingers at the ready….

CLICK!

I resonate with what you wrote, Urmila. That friction between AI assistants and actual execution is so real. I've been a bit skeptical about how fast things would change, but moving from 'AI that suggests' to 'AI that executes' truly feels like the next big leap. Thanks for articulating this so well.